Automating TikTok content with AI

by Ryan Quinn

Over a year ago, when I first started diving deeper into LLMs like GPT I started thinking about how these tools can empower a developer to create truly hands off systems including for tasks that generally required the "human touch".

In the last couple years, we've seen the proliferation of AI generated content on social media and honestly, most of it is not great quality. While AI can create amazing images and now even videos from nothing, there is still a lot lacking when it comes to storytelling. The same formulaic approach that can make AI so useful also tends to make it's writing dry and professional and it's stories simple and one dimensional. While this is likely to change and with work you can get reasonable results through prompt engineering, we're not quite to a point where AI is going to be producing the next hit sitcom or blockbuster movie.

Despite the limitations I was drawn to the idea of creating an AI powered system that could create short videos all on it's own that wouldn't just further pollute the social media networks with more cookie-cutter videos reading Reddit stories while video of Minecraft gameplay loops on screen.

While AI isn't yet very good at compelling fictional stories, it is quite good at relaying facts and condensing information into a digestible format and length so I decided to leave fiction alone and focus on a system to create videos covering the latest world news. Here's how I went about it.

Modular, not Monolithic

First, I decided that since I was using this project to learn it would make sense to create each step in the process as it's own python script. This allowed me to focus on each piece individually and made debugging considerably easier even if the project folder is a bit more cluttered. First I needed to define the steps:

- Story Selection - First the system needs to actually collect information on current events but it also needs to make decisions on which news stories are important or deserve to be covered. To accomplish this I started by creating a list of major news outlet's public RSS feeds. When this first script runs it collects all the headlines from multiple sources before having our AI evaluate this and determine the top five stories for the day.

- Script Writing - Once we've identified our stories we grab the contents of each of the sources reporting that news item, combine this information with instructions on tone, length, and other information to help ensure that the resulting script is in line with the rest of our content. Based on this, the AI generates our video script.

- Visuals - One major hurdle in this project was what to do about the visual aspect of these generated videos. Since this project began, AI generated video has progressed a ton with amazing clips being shared by companies in the AI space but not only is much of this newest tech not yet available for consumers, it's also likely to be expensive due to the massive amount of compute required.

For the first version of the AI Video pipeline I opted to use stock videos and images from Pixabay and developed a script which would generate keywords based on the story content and use the Pixabay API to choose which videos to use for each story.

This worked but stock videos were often used repeatedly for any story about a similar topic. Because the AI would give preference for the top results from the API the resulting videos were lacking variety.

First, I modified my Pixabay script so that it would also search for images, and if an image was selected, it would apply an animation effect to the image to create that section's video. While this added more options, the AI still had a strong tendency to reuse the same visual content over and over.

After several months I decided to rewrite this script completely, abandoning stock videos and images for AI generation with StableDiffusion. Since StableDiffusion runs locally and the system generates it's videos overnight while I am sleeping it made sense to make use of some unused GPU capacity and generate our own images. I tested several SD models, finding one that provided consistent results and configured an aspect ratio and size that would allow me to apply scrolling effects to the images to generate our video files. While every now and then the script will spit out truly weird images it's generally been very reliable. - SSML - SSML is a markup language for speech synthesis. It allows the specification of various tags to control the tone and cadence of generated speech. While no longer in use in my pipeline, the system includes a tool which takes our generated script and creates an SSML version. Ultimately the differences in audio quality weren't huge and OpenAI's models were not good at avoiding SSML tags that were not supported by AWS so it was disabled.

- Narration - When creating the voice generation script I would love to have been able to use ElevenLabs as I have yet to find speech synthesis that can match it's realism, but you get what you pay for and ElevenLabs is rather expensive. Instead I chose to use Amazon Polly which offers a decent selection of voices. Initially this used the SSML markdown the earlier step generated but now that the SSML step is skipped it simply uses the base script.

- Visuals (Animation) - In this step our script takes the image generated by stable diffusion for each story and crops and animates it using the moviepy library to make the visual side of things a bit more compelling. This step uses the duration of the narration audio files generated by the earlier step to ensure that the videos created are of the same duration. For source images it creates it's animation for the desired length and for stock videos it trims or loops the video to achieve the desired duration.

- Subtitles - I wanted to incorporate subtitles in my videos to make them more accessible and engaging. Lacking timestamped subtitle files based on our narration audio I chose to take a more basic approach. The script is split up into sentences short enough to be displayed on screen and evenly divides the duration of the clip by the number of sentences. The subtitles sometimes fall out of sync with the narration but as most of these clips are less than 60 seconds there isn't enough time for things to drift very far. Once split up, the subtitles are overlaid on each video clip using moviepy.

- Hashtags - In the first few weeks running this pipeline daily I was manually creating descriptions and hashtags for each video. This was the opposite of the hands off automation I was going for so I added a step which will generate a list of hashtags for each story. Getting GPT to stop creating long hashtags made of multiple words and stick to more basic concepts took some tweaking and it will still come up with some truly terrible hashtags at times but after some tuning those instances are fairly rare.

- Putting it all together - After all that we have a set of short video clips, each with original visuals created by StableDiffusion, narration via Amazon Polly, and subtitles. The final touches are handled by this last step. This script randomly chooses the backing music track from a folder with several options then it adds an opening card (which gets the day's date overlaid on it), closing screen, and puts it all together into a finished video.

Editorial - The one human step

While this pipeline is reliable, creating consistent and informative content, it's not perfect. Sometimes the AI will get weird. It might generate really weird visuals for a video or decide to ignore it's style prompts and write scripts that don't make much sense. On a couple occasions, on stories from a Ukrainian news source reporting the Ukranian Military's reported numbers on Russian casualties it reported that information as coming from the Russian military. If the goal is providing trustworthy information then putting a human in the mix if only to check the AI's work was important.

Since starting this project about a year ago I have gained a new morning routine. I wake up, grab a coffee and watch the news, only the news reports I am watching were created by my computer while I slept. At around 2AM each day the system kicks off it's work and by the time I get up I have twenty videos across four categories to review. Through trial and error I've learned that the videos perform best when no more than 2-3 are posted each day so it's my task to choose which ones to post and, having watched them all, I've also verified that they are accurate and of decent quality.

What's next?

Aside from exploring potential commercial uses for the system, perhaps by automatically generating short form videos based on blog posts by a company, I hope to continue to expand the capabilities of the system and, hopefully, eventually get to the point where I feel confident in adapting the system to write fiction.

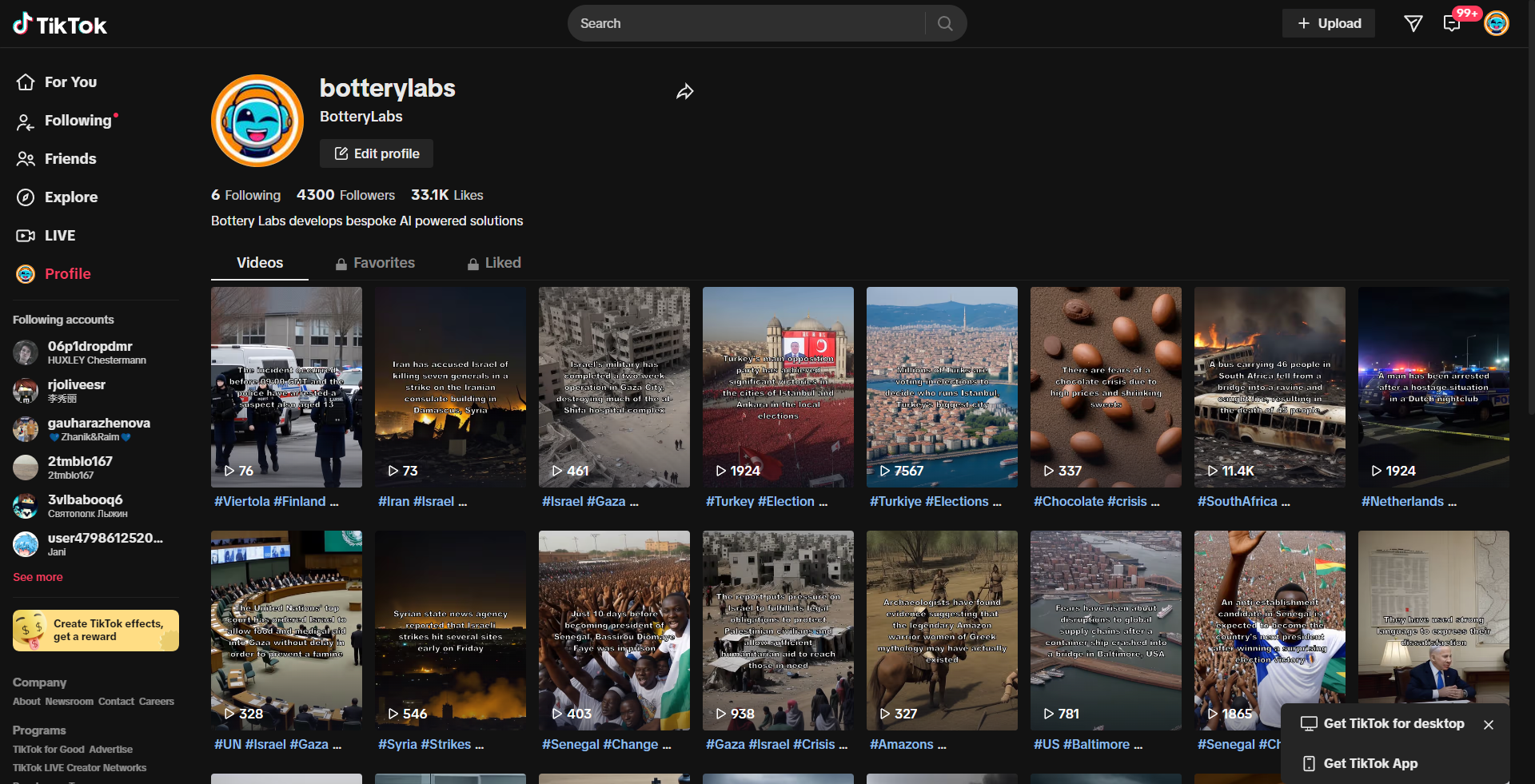

Check it out

If you want to check out the videos created by this platform you can check out the profile on TikTok here: https://www.tiktok.com/@botterylabs

If you would like to work with me on your project or want to inquire about commercial adaptation of the AI Video Pipeline system, send me an email: ryanpq@gmail.com